Specify dynamic JSON content in ADF

This article shows how to utilise the JSON editor and key vault secret references in Azure Data Factory (ADF) to provide an alternative experience for linked service connectors that do not have built-in parameterisation support.

The importance of linked service parameterisation

If done well, parameterising connection elements can bring value to the development process. The following is a list of some benefits and is by no means exhaustive.

Benefits

- Ability to hide sensitive connection details

- Encourages code reuse

- May reduce the number of linked service connections required

ADF UI parameterisation

As this article is concerned with showing an alternative method using the JSON editor and key vault, we will not dwell on demonstrating the built-in parameterisation, which is supported for only a limited number of linked service connectors.

For more details on using the ADF user interface (UI) for parameterisation and supported connectors, see the resources section at the end of this article.

Specify dynamic content and reference key vault

Prerequisites

In the content we’re about to cover, we need to do the following to set up our resources:

1. Create an Azure Data Factory

2. Create an Azure Databricks workspace (trial is fine)

- Create a bearer token and retain it for step 3

- Create an interactive cluster and retain its cluster ID for step 3

- Note the Azure Databricks workspace URL and retain it for step 3

3. Create an Azure Key Vault

- Add the Databricks bearer token as a secret in key vault

- Add the Databricks workspace URL as a secret in key vault

- Add the Databricks interactive cluster ID as a secret in key vault

- In access policies, grant the Azure Data Factory created in step 1 permission to get and list secrets

Set up

Create an ADF key vault service connection

1. Select the option to create a key vault linked service connection in the management hub, give your key vault a name and select the “Specify dynamic contents in JSON format” option. The result should look like the following image.

ADF advanced option

2. Create the key vault linked service by creating a parameter named baseUrl and referencing that parameter. The following image shows what that would look like.

Specify dynamic contents in JSON format option

3. Click on “Test connection”, enter the key vault URL as the parameter value and hit “Ok” to test the changes.

4. Once validated, the connection should succeed and at this point, changes can be committed.

Test ADF connection
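For readers without the screenshots, the key vault linked service from step 2 can be sketched in JSON roughly as follows. This is a minimal sketch, not the exact content of the image: the linked service name `AzureKeyVault1` is an assumption, while the `baseUrl` parameter is the one named in step 2.

```json
{
    "name": "AzureKeyVault1",
    "properties": {
        "type": "AzureKeyVault",
        "parameters": {
            "baseUrl": {
                "type": "String"
            }
        },
        "typeProperties": {
            "baseUrl": "@{linkedService().baseUrl}"
        }
    }
}
```

The `@{linkedService().baseUrl}` expression resolves to whatever value is supplied for the parameter, which is why “Test connection” prompts for the key vault URL in step 3.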

Create a Databricks service connection

To see how we can leverage key vault by specifying dynamic content in JSON format, we will use a Databricks service connector, as the UI support for referencing key vault from it is slightly limited (as of October 2020).

The following screenshot shows the default UI options for entering connection details.

The red boxes are of particular interest, as we want them to reference key vault secrets. This is so we can hide sensitive connection details about our Databricks environment from unauthorised users. Rather than continue with the default setup, let’s instead use the JSON editor as detailed in the following section.

Dynamic content referencing key vault

1. Like the key vault connection we created earlier, we will select the “Specify dynamic contents in JSON format” option in the Databricks linked service just created.

2. Paste the following code in the JSON editor.

specify_json_databricks_key_vault.txt (downloadable attachment, 2 KB)

3. Let’s review the JSON we’ve just added:

- 3.1 A parameter is added for the URL of the key vault that holds the secrets to be referenced, as shown below

Parameter addition

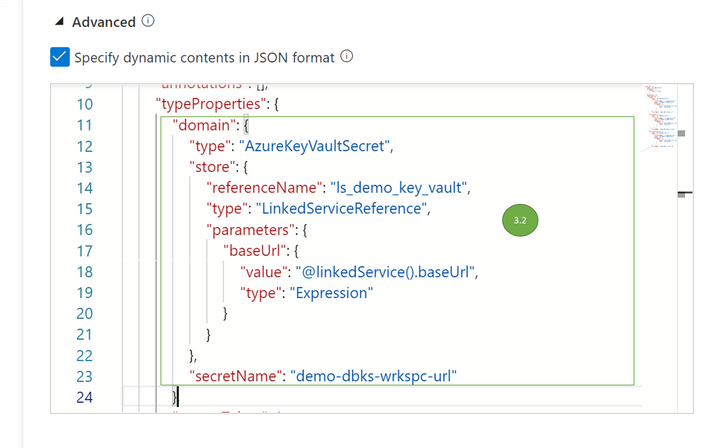

- 3.2 The following screenshot shows a reference to a key vault secret named “demo-dbks-wkspc-url”, which holds the workspace URL value

Json key vault domain reference

- 3.3 The following image shows a reference to a Databricks access token (bearer token) that is saved as a key vault secret named “demo-dbks-bearer-token”. Note that the UI does provide built-in support for referencing access token values as key vault secrets.

Json key vault access token reference

- 3.4 The following screenshot shows how we get the value of the interactive Databricks cluster id by referencing the key vault secret named “demo-dbks-cluster-id”

Json key vault cluster id reference

- 3.5 The next image shows the testing of the Databricks linked service connection and the option to enter the key vault URL value.

Json key vault url value during connection test

- 3.6 The connection test should be successful if all references have been entered correctly, as shown below.

Test Databricks linked service connection
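Pulling items 3.1 to 3.4 together, the Databricks linked service JSON might look roughly like the sketch below. It is not a copy of the attachment from step 2: the linked service names `AzureDatabricks1` and `AzureKeyVault1` and the parameter name `kvBaseUrl` are assumptions for illustration, whereas the three secret names and the key vault `baseUrl` parameter are the ones used in this article.

```json
{
    "name": "AzureDatabricks1",
    "properties": {
        "type": "AzureDatabricks",
        "parameters": {
            "kvBaseUrl": {
                "type": "String"
            }
        },
        "typeProperties": {
            "domain": {
                "type": "AzureKeyVaultSecret",
                "store": {
                    "referenceName": "AzureKeyVault1",
                    "type": "LinkedServiceReference",
                    "parameters": {
                        "baseUrl": "@{linkedService().kvBaseUrl}"
                    }
                },
                "secretName": "demo-dbks-wkspc-url"
            },
            "accessToken": {
                "type": "AzureKeyVaultSecret",
                "store": {
                    "referenceName": "AzureKeyVault1",
                    "type": "LinkedServiceReference",
                    "parameters": {
                        "baseUrl": "@{linkedService().kvBaseUrl}"
                    }
                },
                "secretName": "demo-dbks-bearer-token"
            },
            "existingClusterId": {
                "type": "AzureKeyVaultSecret",
                "store": {
                    "referenceName": "AzureKeyVault1",
                    "type": "LinkedServiceReference",
                    "parameters": {
                        "baseUrl": "@{linkedService().kvBaseUrl}"
                    }
                },
                "secretName": "demo-dbks-cluster-id"
            }
        }
    }
}
```

Each property repeats the same pattern: an `AzureKeyVaultSecret` object that points at the key vault linked service, passes the key vault URL through to its `baseUrl` parameter, and names the secret to read. No workspace URL, token or cluster ID appears in the definition itself.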

Review

Observing the implications of adding dynamic content and referencing key vault:

Pros

- More flexible - Hard coded values that could change per environment have been avoided and, instead, key vault secret names have been referenced

- More reusable - Connections are parameterised, enabling pipeline deployments and development work to be more flexible, e.g. different secret values per environment

- More secure - Sensitive information is hidden away and should only be visible to users/applications that have access to the key vault where the secrets are stored

Cons

- Slightly awkward. In the absence of built-in parameterisation in the ADF UI, the approach may not appeal to users who prefer the UI.

Alternatives

Besides hard coding values, another option that avoids hard coded values and retains some flexibility is tokenisation, although it can be more awkward and less secure than the approach demonstrated above.

Tokenisation replaces, typically at deploy time, a token (analogous to a secret name) with a variable value (analogous to the secret value). An example of such a task for a DevOps pipeline is listed in the resources section at the end of this article.

In this case, only the token is visible during the ADF development process, but once changes are published to the Data Factory, the underlying value is exposed. Points to consider here are security (the published ADF exposes the token value) and slightly more awkward development (debug runs would fail if run against tokens rather than the token values).

This may be the subject of a future post. Arguably, one would rather employ this fiddlier approach than purely hard coded values; however, tokenisation wouldn’t be my preferred option over referencing key vault.
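To make the contrast with key vault references concrete, a tokenised Databricks linked service might look something like the sketch below. The `#{...}#` token pattern and the token names are assumptions for illustration; the actual pattern depends on how the replace tokens task is configured in your pipeline.

```json
{
    "name": "AzureDatabricks1",
    "properties": {
        "type": "AzureDatabricks",
        "typeProperties": {
            "domain": "#{databricksWorkspaceUrl}#",
            "accessToken": {
                "type": "SecureString",
                "value": "#{databricksBearerToken}#"
            },
            "existingClusterId": "#{databricksClusterId}#"
        }
    }
}
```

Until the deployment task swaps each token for its value, this definition cannot connect to anything, which is why debug runs against the tokenised version would fail.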

Final thoughts

By specifying dynamic contents in the JSON editor, we’ve made our example Databricks linked service connection more flexible, as well as more developer and administrator friendly. Try it out yourself and see what other linked services you’d want to extend this to.

Resources

Supported linked service connectors for built-in parameterisation

Parameterising linked services using built-in UI

Replace tokens task in Azure DevOps marketplace